How To Run OpenMP Program In Dev C++

Question 1 (A serial C program):

Suppose the run-time of a serial program is given by $T_{\text{serial}} = n^2$, where the units of the run-time are in microseconds. Suppose that a parallelization of this program has run-time $T_{\text{parallel}} = n^2/p + \log_2(p)$. Write a program that finds the speedups and efficiencies of this program for various values of $n$ and $p$. Run your program with $n = 10, 20, 40, \ldots, 320$ and $p = 1, 2, 4, \ldots, 128$.

OpenMP requires compiler support. You can find a list of compilers on the OpenMP ARB web page. Currently, on the upper left-hand side of the page, there is a box labeled 'What's Here', and under it a header 'OpenMP Compilers'.

I would like to use OpenMP to parallelize some of my functions in a C program. I am using Ubuntu 12.04 on an Intel i5 with 4 cores. But after following certain steps, I do not see any improvement in performance, and the system monitor in Ubuntu shows that only one CPU core is being used. What I did:

$ icc -o omphelloc -openmp omphello.c
omphello.c(22): (col. 1) remark: OpenMP DEFINED REGION WAS PARALLELIZED.
$ export OMP_NUM_THREADS=3
$ ./omphelloc
Hello World from thread = 0
Hello World from thread = 2
Hello World from thread = 1
Number of threads = 3
$ ifort -o omphellof -openmp omphello.f

OpenMP (www.openmp.org) makes writing multithreaded code in C/C++ easy. OpenMP is cross-platform and can normally be seen as an extension to the C/C++ or Fortran compiler: OpenMP hooks into the compiler so that you can use a set of compiler directives, library routines, and environment variables to specify shared-memory parallelism.

Then I installed the Intel C compiler. I am able to create an Intel C compiler project in Visual Studio. But when I create an OpenMP program and try to run it, I get the following warning messages: Deleting intermediate files and output files for project 'openmptest1', configuration 'Debug Win32'. 1Compiling with Intel C 11.1.048 IA-32.

Question 2 (MPI):

Question 3 (Pthreads):

Write a parallel program using Pthreads to accomplish the same as the following serial program. In the serial program, the user enters a line and the program outputs the number of occurrences of each character. In the parallel program, if we have n threads, we should divide the line into n segments and let each thread count the characters of its segment. Note: Do not count the space or the tab characters.

Question 4 (OpenMP):

Write a program using OpenMP that creates 8 threads to accomplish the following:

a) Declare a shared array: a of type integer of size 800.

b) Declare a shared array: b of type integer of size 8.

c) Generates 800 random numbers in the range 0 to 50,000 inclusive and stores them in array: a.

d) The 8 threads with the ranks 0, 1, 2, 3, 4, 5, 6, 7 find the biggest number of array: a, from indices 0 to 99, 100 to 199, 200 to 299, 300 to 399, 400 to 499, 500 to 599, 600 to 699, 700 to 799 and store them in b[0], b[1], b[2], b[3], b[4], b[5], b[6], and b[7] respectively.

e) The function: main displays the biggest number of array: b.

- Tim Mattson, lectures on YouTube

- Further reading at openmp.org: intro

OpenMP in a nutshell

OpenMP is an API for parallel programming in the SMP (symmetric multi-processors, or shared-memory processors) model. When programming with OpenMP, all threads share memory and data. OpenMP supports C, C++ and Fortran. The OpenMP functions are declared in a header file called omp.h.

OpenMP program structure: An OpenMP program has sections that are sequential and sections that are parallel. In general an OpenMP program starts with a sequential section in which it sets up the environment, initializes the variables, and so on.

When run, an OpenMP program will use one thread (in the sequential sections), and several threads (in the parallel sections).

There is one thread that runs from the beginning to the end, and it's called the master thread. The parallel sections of the program will cause additional threads to fork. These are called the slave threads.

A section of code that is to be executed in parallel is marked by a special directive (omp pragma). When the execution reaches a parallel section (marked by omp pragma), this directive will cause slave threads to form. Each thread executes the parallel section of the code independently. When a thread finishes, it joins the master. When all threads finish, the master continues with code following the parallel section.

Each thread has an ID attached to it that can be obtained using a runtime library function (called omp_get_thread_num()). The ID of the master thread is 0.

Why OpenMP? More efficient, lower-level parallel code is possible; however, OpenMP hides the low-level details and allows the programmer to describe the parallel code with high-level constructs, which is as simple as it can get.

OpenMP has directives that allow the programmer to:

- specify the parallel region

- specify whether the variables in the parallel section are private or shared

- specify how/if the threads are synchronized

- specify how to parallelize loops

- specify how the work is divided between threads (scheduling)

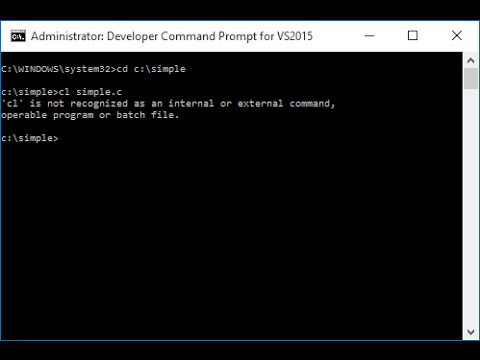

Compiling and running OpenMP code

The OpenMP functions are declared in a header file called omp.h. The public Linux machines dover and foxcroft have gcc/g++ installed with OpenMP support. All you need to do is pass the -fopenmp flag on the command line.

It’s also pretty easy to get OpenMP to work on a Mac. A quick search with Google reveals that the native Apple compiler clang is installed without OpenMP support. When you installed gcc, it probably got installed without OpenMP support. To test, go to the terminal and try to compile something. If you get an error message saying that “omp.h” is unknown, that means your compiler does not have OpenMP support. Here’s what I did:

1. I installed Homebrew, the missing package manager for macOS, http://brew.sh/index.html
2. Then I asked brew to install gcc.
3. Then type ‘gcc’ and press tab; it will complete with all the versions of gcc installed.
4. The obvious guess here is that gcc-6 is the latest version, so I use it to compile. Works!

Specifying the parallel region (creating threads)

The basic directive is #pragma omp parallel, followed by a block of code. When the master thread reaches this line, it forks additional threads to carry out the work enclosed in the block following the #pragma construct. The block is executed by all threads in parallel. The original thread is the master thread, with thread ID 0.

Example (C program): display 'Hello, world.' using multiple threads, compiling with gcc and the -fopenmp flag. On a computer with two cores, and thus two threads, the message is printed twice. On dover, I got 24 hellos, for 24 threads. On my desktop I get (only) 8. How many do you get?

Note that the threads are all writing to the standard output, and there is a race to share it. The way the threads are interleaved is completely arbitrary, so the output can come out garbled.

Private and shared variables

In a parallel section variables can be private or shared:

- private: the variable is private to each thread, which means each thread will have its own local copy. A private variable is not initialized and the value is not maintained for use outside the parallel region. By default, the loop iteration counters in the OpenMP loop constructs are private.

- shared: the variable is shared, which means it is visible to and accessible by all threads simultaneously. By default, all variables in the work sharing region are shared except the loop iteration counter. Shared variables must be used with care because unsynchronized updates to them cause race conditions.

The type of the variable, private or shared, is specified with the private(...) and shared(...) clauses following the #pragma omp directive.

Private or shared? Sometimes your algorithm will require sharing variables, other times it will require private variables. The caveat with sharing is race conditions. The task of thinking through the details of a parallel algorithm and specifying the type of each variable falls, of course, on the programmer.

Synchronization

OpenMP lets you specify how to synchronize the threads. Here’s what’s available:

- critical: the enclosed code block will be executed by only one thread at a time, and not simultaneously executed by multiple threads. It is often used to protect shared data from race conditions.

- atomic: the memory update (write, or read-modify-write) in the next instruction will be performed atomically. It does not make the entire statement atomic; only the memory update is atomic. A compiler might use special hardware instructions for better performance than when using critical.

- ordered: the structured block is executed in the order in which iterations would be executed in a sequential loop

- barrier: each thread waits until all of the other threads of a team have reached this point. A work-sharing construct has an implicit barrier synchronization at the end.

- nowait: specifies that threads completing assigned work can proceed without waiting for all threads in the team to finish. In the absence of this clause, threads encounter a barrier synchronization at the end of the work sharing construct.

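A barrier example typically calls the runtime function omp_get_num_threads() to find out how many threads are in the team. Commonly used runtime functions are:

- omp_get_num_threads: returns the number of threads in the current team (1 outside a parallel region)

- omp_get_num_procs: returns the number of processors available to the program

- omp_set_num_threads: sets the number of threads to use for subsequent parallel regions

- omp_get_max_threads: returns an upper bound on the number of threads a new parallel region would use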

Parallelizing loops

Parallelizing loops with OpenMP is straightforward. One simply denotes the loop to be parallelized and a few parameters, and OpenMP takes care of the rest. Can't be easier!

The directive is called a work-sharing construct. The “#pragma omp for” directive distributes the iterations of the loop among the threads. It must be placed inside a parallel block. A typical example is adding all elements in an array.

There is also a “parallel for” directive, which combines a parallel and a for (no need to nest a for inside a parallel).

Exactly how the iterations are assigned to each thread is specified by the schedule (see below).

Note: Since variable i is declared inside the parallel for, each thread will have its own private version of i.

Loop scheduling

OpenMP lets you control how the threads are scheduled. The types of schedule available are:

- static: Each thread is assigned its iterations in a fixed fashion before the loop runs. Without a chunk parameter, the iterations are divided among the threads in roughly equal contiguous blocks. Specifying an integer for the parameter chunk allocates chunks of that many contiguous iterations to the threads in round-robin order. When no schedule clause is given, the default is implementation-defined; in practice it is commonly static.

- dynamic: Each thread starts with a chunk of iterations; then, as each thread completes its iterations, it gets assigned the next chunk. The parameter chunk defines the number of contiguous iterations that are allocated to a thread at a time.

- guided: Iterations are divided into pieces that successively decrease (roughly exponentially) as the remaining work shrinks, with chunk being the smallest size.

More complex directives

...which you probably won't need:

- can define “sections” inside a parallel block

- can request that iterations of a loop are executed in order

- specify a block to be executed only by the master thread

- specify a block to be executed only by the first thread that reaches it

- define a section to be “critical”: will be executed by each thread, but can be executed only by a single thread at a time. This forces threads to take turns, not interrupt each other.

- define a section to be “atomic”: this forces threads to write to a shared memory location in a serial manner to avoid race conditions

Performance considerations

Critical sections and atomic sections serialize the execution and eliminate the concurrent execution of threads. If used unwisely, OpenMP code can be worse than serial code because of all the thread overhead.

Some comments

OpenMP is not magic. A loop must be obviously parallelizable for OpenMP to divide its iterations among threads. OpenMP does not check for data dependencies: if one iteration depends on the result of a previous one, the loop cannot be parallelized as written, and forcing it with a directive gives wrong results.

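The for loop cannot exit early, for example with a break or a return inside the loop body. The values of the loop control expressions must be the same for all iterations of the loop; for example, the loop bound must not be modified inside the body.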